Export Web Tables to JSON for Pandas — Skip the Scraping

Writing a BeautifulSoup scraper for a simple table export? There's a faster way. Export HTML tables directly to JSON and load them into Pandas quickly.

Use HTML Table Exporter to export any web table to JSON, then load it with pd.read_json('table-1.json'). Skip the scraping entirely.

Why JSON over CSV?

CSV is great, but JSON has advantages for Python workflows:

- Type preservation: Numbers stay numbers, strings stay strings

- Nested data: JSON handles complex structures that CSV can't

- Direct to dict: Python's built-in json module loads JSON straight into lists and dictionaries

- API compatibility: Same format as most web APIs

For quick data analysis, CSV works fine. For building pipelines or working with APIs, JSON is often cleaner.
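To make the type-preservation point concrete, here is a minimal sketch using only the standard library. The sample rows and the column names (`zip`, `population`) are made up for illustration, and it assumes the export writes numeric cells as JSON numbers and text cells as JSON strings.

```python
import csv
import io
import json

# Hypothetical sample data: the same row expressed as CSV and as JSON.
csv_text = "zip,population\n02134,21503\n"
json_text = '[{"zip": "02134", "population": 21503}]'

# csv.DictReader returns every field as a string; types must be restored by hand.
csv_row = next(csv.DictReader(io.StringIO(csv_text)))

# json.loads keeps the string/number distinction that is written in the file.
json_row = json.loads(json_text)[0]

print(type(csv_row["population"]).__name__)   # str
print(type(json_row["population"]).__name__)  # int
print(json_row["zip"])                        # 02134 (still a string, leading zero kept)
```

With CSV, a loader has to guess whether `02134` is a number or a label. With JSON, the file itself already says which one it is.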

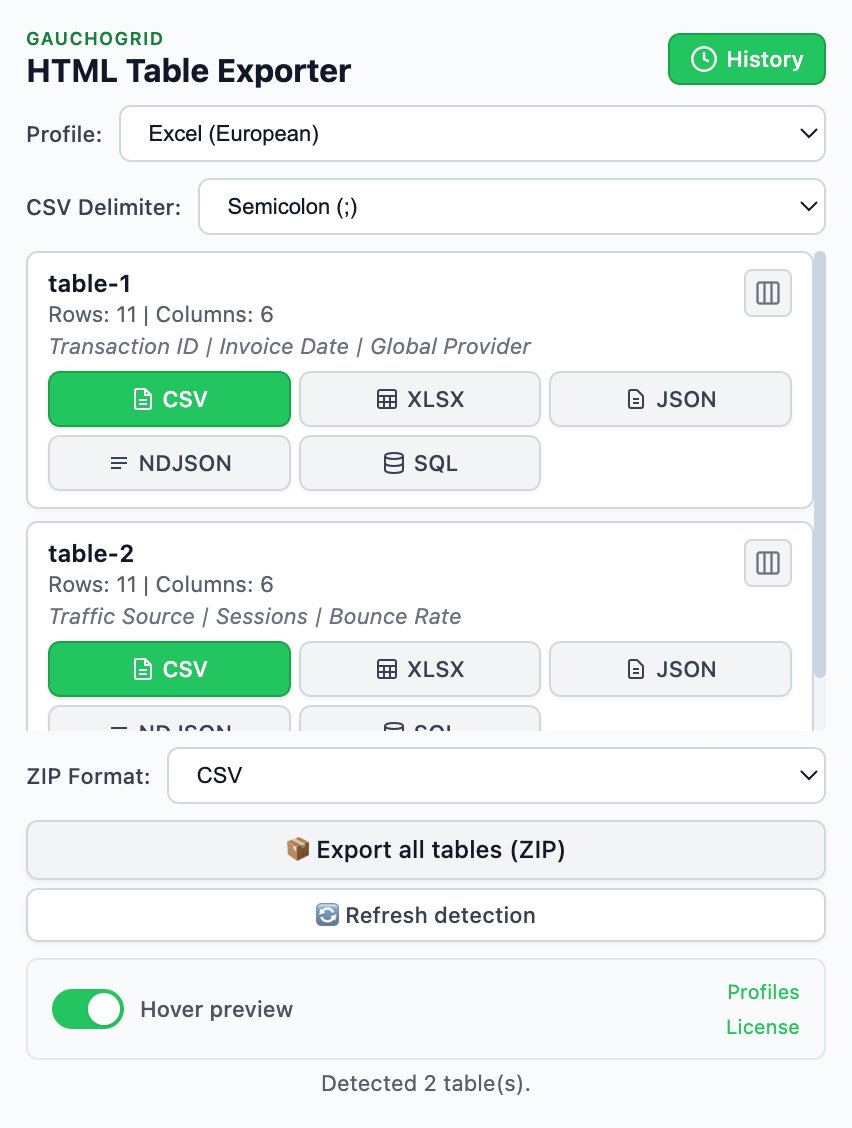

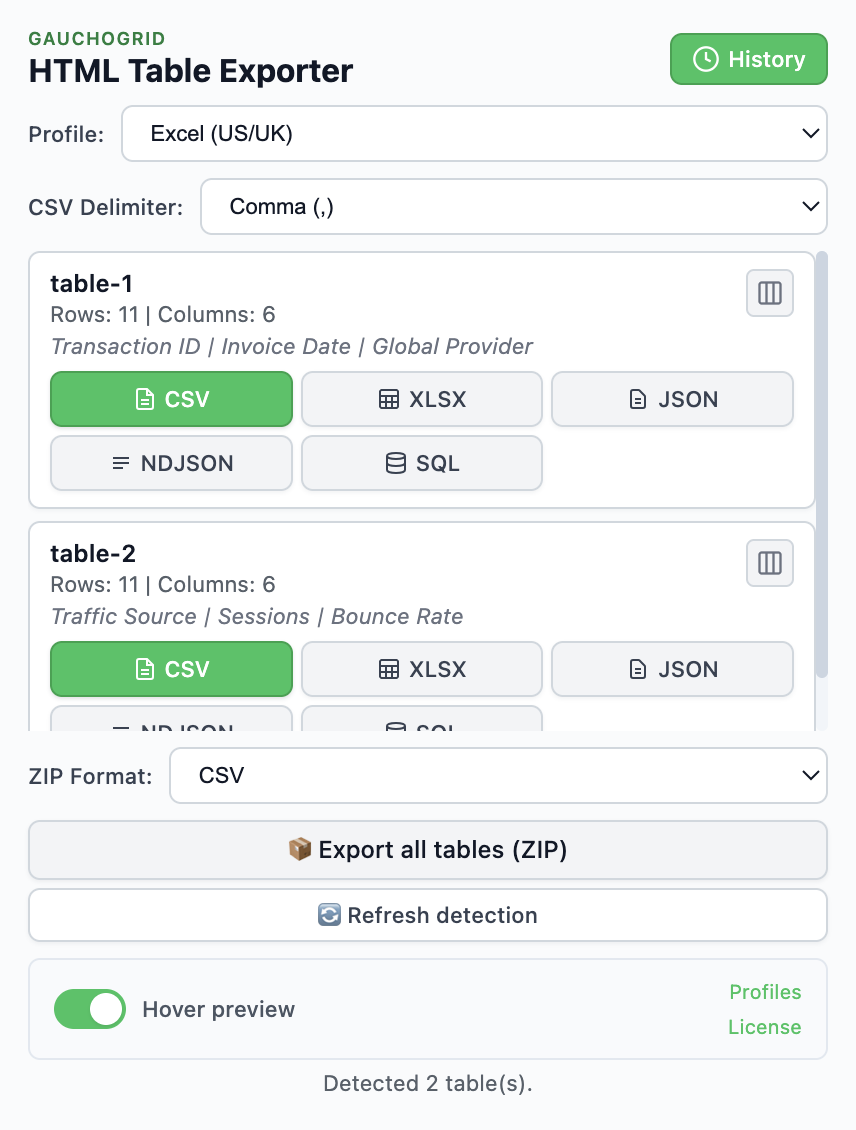

Exporting HTML tables to JSON

Install the extension

Add HTML Table Exporter to Chrome. Free, no account.

Navigate to your table

Go to any page with the data you need and click on the table first. When you open the extension, that table is highlighted and shown in the popup, so the export options are right there and you don't have to search for it.

Click JSON to export

Click the extension icon, find your table, click JSON. File downloads immediately.


Loading JSON into Pandas

Once you have your JSON file, loading it into Pandas is trivial:

import pandas as pd

# Load the exported JSON
df = pd.read_json('table-1.json')

# That's it. Your data is ready.
print(df.head())
df.info()  # info() prints its own report and returns None, so no print() needed

The JSON export uses the "records" orientation by default, which pd.read_json reads without any extra arguments:

[
  {"Column1": "Value1", "Column2": "Value2"},
  {"Column1": "Value3", "Column2": "Value4"}
]

NDJSON for streaming and big data

For large datasets or streaming pipelines, HTML Table Exporter PRO offers NDJSON (Newline-Delimited JSON) export:

{"Column1": "Value1", "Column2": "Value2"}
{"Column1": "Value3", "Column2": "Value4"}

Each line is a separate JSON object. This format works well for:

- BigQuery imports: BigQuery load jobs accept newline-delimited JSON directly

- Streaming processing: Process line-by-line without loading entire file

- Log pipelines: Works with tools like jq, Elasticsearch, Splunk

- Large files: Memory-efficient for huge datasets
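The line-by-line point is easy to see in code. Here is a minimal sketch using only the standard library; `iter_records` is a hypothetical helper name, and the in-memory `sample` stands in for an opened `.ndjson` file with the same two rows as the example above.

```python
import io
import json
from typing import Iterator, TextIO


def iter_records(stream: TextIO) -> Iterator[dict]:
    """Yield one parsed record per non-empty line of an NDJSON stream."""
    for line in stream:
        line = line.strip()
        if line:  # tolerate blank lines, e.g. a trailing newline
            yield json.loads(line)


# Stand-in for open('table-1.ndjson', encoding='utf-8').
sample = io.StringIO(
    '{"Column1": "Value1", "Column2": "Value2"}\n'
    '{"Column1": "Value3", "Column2": "Value4"}\n'
)

# Only one record is held in memory at a time while iterating.
first_values = [record["Column1"] for record in iter_records(sample)]
print(first_values)  # ['Value1', 'Value3']
```

Because each line parses on its own, the same loop works whether the file has two rows or two million.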

Loading NDJSON in Pandas:

df = pd.read_json('table-1.ndjson', lines=True)

SQL export too

Need to insert data into a database? PRO also exports SQL INSERT statements. Just export, run in your database client, done.
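If you would rather run the statements from Python than from a database client, something like the following could work. This is only a sketch: the exact SQL the PRO export produces isn't shown here, so the table name `table_1`, the column names, and the presence of a `CREATE TABLE` statement are all assumptions, and SQLite is used simply because it ships with Python.

```python
import sqlite3

# Hypothetical stand-in for the contents of an exported .sql file.
# The real export's table name, columns, and quoting may differ.
exported_sql = """
CREATE TABLE table_1 (Column1 TEXT, Column2 TEXT);
INSERT INTO table_1 (Column1, Column2) VALUES ('Value1', 'Value2');
INSERT INTO table_1 (Column1, Column2) VALUES ('Value3', 'Value4');
"""

conn = sqlite3.connect(":memory:")  # throwaway in-memory database
conn.executescript(exported_sql)    # runs every statement in the script

rows = conn.execute(
    "SELECT Column1, Column2 FROM table_1 ORDER BY Column1"
).fetchall()
print(rows)
conn.close()
```

For a real file you would read its text from disk instead of using the inline string, and point `sqlite3.connect` at your own database.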

When to still use web scraping

Browser extensions are great for one-off exports and small batches. Use scraping when you need:

- Automated pipelines: Data that updates daily and needs to be fetched automatically

- Hundreds of pages: Bulk extraction across many URLs

- API-like access: Programmatic control over the extraction process

- CI/CD integration: Data extraction as part of automated workflows

For exploratory analysis, one-time exports, or prototyping your data pipeline, the browser extension is faster than writing scraper code.
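For the automated cases, the extraction has to live in code. As a rough idea of what that involves, here is a minimal sketch that uses Python's standard-library `html.parser` rather than BeautifulSoup. `TableParser` and the inline HTML are hypothetical, fetching the page is left out, and nested tables, `colspan`, and `rowspan` are not handled.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []     # finished rows, each a list of cell strings
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:  # ignore text outside of cells
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Hypothetical page fragment; a real pipeline would download this first.
html = """<table>
<tr><th>Column1</th><th>Column2</th></tr>
<tr><td>Value1</td><td>Value2</td></tr>
<tr><td>Value3</td><td>Value4</td></tr>
</table>"""

parser = TableParser()
parser.feed(html)

# Treat the first row as the header and build "records"-style dictionaries.
header, *body = parser.rows
records = [dict(zip(header, row)) for row in body]
print(records)
```

That is a fair amount of code for one well-behaved table, which is why the extension is the quicker route when you only need the data once.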

If you just need Excel or CSV output, the process is even simpler—see how to export HTML tables to CSV.

Skip the scraping

Export HTML tables to JSON (or NDJSON for pipelines) and load directly into Pandas. Free for basic exports.

No BeautifulSoup needed · Fast exports · Pandas-ready